Are You Being Watched?

By Steph Smith

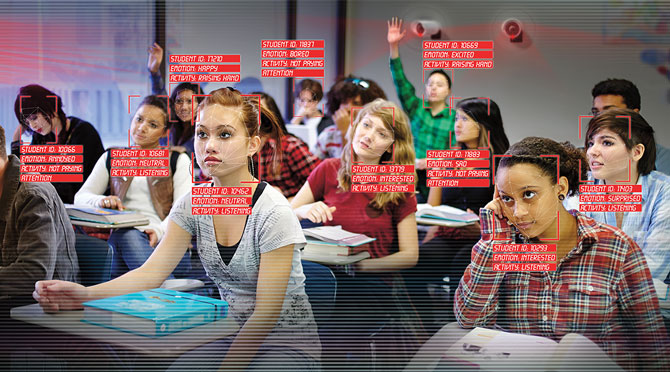

You’re sitting in class listening to today’s lesson when your mind starts to wander. At first, your teacher doesn’t notice you’ve stopped paying attention, but the cameras watching you do. They scan the faces of you and your classmates every second to determine how engaged you are. The devices alert your teacher, who tells you to quit daydreaming. You sit up straight and glance warily at the artificial eyes watching you.

This scenario may sound like science fiction, but facial recognition technology like this is actually being used in a school in China. It can detect when students are listening, answering questions, writing, interacting with one another, or asleep. A similar system tested in another Chinese school even analyzes students’ expressions to track whether they’re angry, happy, disappointed, or sad. The data collected by both systems is used to evaluate students’ class performance. Each person receives a score, which gets displayed on a screen at school and can be viewed on an app by parents, pupils, and teachers.

Right now, schools in the U.S. aren’t using this kind of technology to keep tabs on students’ behavior. And it may never be used for that purpose here. But some American schools are considering using facial recognition for other things, like scanning school grounds to spot people who may pose a danger to students. In fact, facial recognition is currently used mainly for security purposes. It can help police identify suspects from video footage. Stores have tested the tech to catch repeat shoplifters. Some makers of electronic devices, like doorbells with built-in cameras that identify visitors, tout facial recognition as an added safety feature.

Some people are embracing this high-tech trend. But it’s raising red flags for others. They believe facial recognition, which is often used without individuals’ knowledge, could violate people’s privacy. The technology has also come under fire for being inaccurate, particularly when identifying people of color. Despite these objections, the use of facial recognition is becoming more widespread, showing up in places from schools and airports to concert venues.

Facial recognition is just one of many types of technology today that utilize biometrics to identify people. Biometrics are measurements of physical characteristics unique to each individual. If you’ve ever used your fingerprint to unlock your cell phone, then you’ve been recognized with the help of biometrics (see Fingerprints and Beyond).

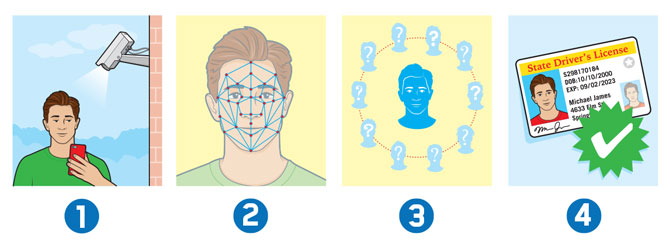

Anil Jain is a computer scientist and biometrics expert at Michigan State University. He investigates how facial recognition technology can benefit society. For example, Jain thinks the technology could aid in solving crimes by allowing detectives to track down suspects far more quickly than traditional methods allow. If a detective has a picture of a suspect, say from a surveillance camera, a facial recognition program could compare it with a database of known faces in hopes of finding a possible match (see How Facial Recognition Works). “It can do a comparison a million times a second,” says Jain. Some experts think the technology could be used in a similar way to help search for missing persons.

Another advantage of the technology, some say, is that it can simplify security screenings in high-traffic places. For instance, travelers no longer have to show their passports multiple times at the Delta Air Lines international terminal in the largest airport in Atlanta. They just look at a camera for identification and move along. Delta says this gets people through the terminal and onto planes faster.

In the wake of several deadly shootings at schools in the U.S., some educational institutions are interested in using facial recognition to better protect students. In 2018, officials in Lockport, New York, installed the technology in some of the city’s public schools. As of press time, the system hasn’t yet gone live. But if it does, its cameras would scan people’s faces at school entrances and in hallways. The images would be compared with a database of individuals not allowed on campus, like suspended students. The software can also detect if someone is holding a gun. If a match is found for a face or a weapon, school officials are notified so they can decide whether taking action, like calling the police, is needed.

Critics, though, say there’s no evidence this technology makes schools safer. Groups that work to protect basic human rights, like privacy, have concerns too. “I’m worried about how schools are conditioning young people to expect that everything they do is going to be monitored and tracked by authorities,” says Kade Crockford. She’s the director of the Technology for Liberty Program at the American Civil Liberties Union of Massachusetts. After pushback from civil liberties groups and parents, New York’s department of education is now reviewing Lockport’s facial recognition system to determine how it should best be used.

Using facial recognition to assess student behavior is another point of controversy. Outside of China, this technology has mainly been used for online courses. Cameras in students’ computers monitor their faces and eye movements as they watch an instructor remotely. The software then notifies the teacher when students aren’t paying attention and generates quizzes so they can review material they may have missed. The program’s creators believe this will help students do better on tests and also help instructors refine their lessons to make them more engaging.

Some watchdog groups, though, are critical of facial recognition programs that attempt to read how people are feeling based on their expressions. They say the technology isn’t backed by enough scientific research and is too simplistic, given the complexity of human emotions.

HOW FACIAL RECOGNITION WORKS

Another problem with facial recognition is how often it makes errors. The technology is far more accurate at recognizing people than it used to be, but not for everyone. It has a harder time correctly identifying women of color and sometimes fails to notice them at all. Researchers at the Massachusetts Institute of Technology Media Lab have helped highlight these flaws. One of their studies used software to analyze photos of famous African American women, including former first lady Michelle Obama and tennis star Serena Williams. The result: The program incorrectly labeled them as men.

These issues exist because all people (including facial recognition software developers) have unconscious biases, or ingrained stereotypes, that affect their behavior, according to Meredith Broussard, an artificial intelligence expert at New York University. Facial recognition software relies on artificial intelligence (a computer’s ability to perform tasks normally associated with human intelligence) to learn to recognize faces. But the databases of images used by the software have typically contained more white people than people of color. “People who created this didn’t notice, because they were mostly white men,” Broussard says.

One way to improve the situation is to make the teams working on facial recognition software more diverse. Until that happens, Broussard worries, biases in the technology could have serious real-world consequences, such as leading police to pursue the wrong suspects or mistakenly arrest someone who’s innocent.

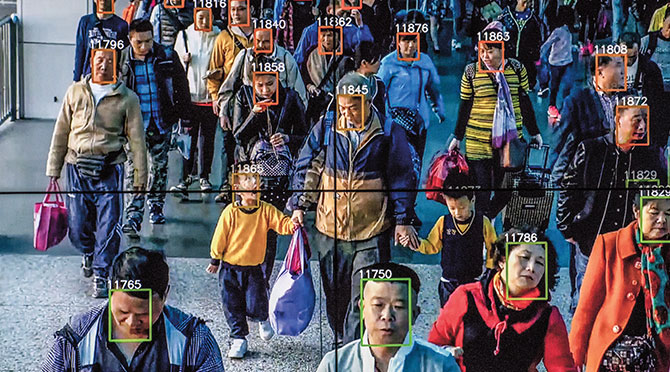

Civil liberties groups have strongly opposed the use of facial recognition by law enforcement agencies, as well as in places like schools and airports. In addition to concerns over privacy and accuracy, these groups worry about the U.S. becoming a surveillance state, a country that closely monitors its citizens.

That’s already happening in China. Its government uses facial recognition cameras to create a giant surveillance network that not only curtails crime but also keeps citizens in line. Police, for example, use the technology to publicly shame people caught committing minor crimes. If people cross the street illegally, police can identify them using facial recognition and post their information on giant electronic billboards to embarrass them.

In the U.S., some police departments have also tested facial recognition to gather information about people on the streets. They claim the purpose is to identify individuals wanted by the police. New York is testing a system to combat terrorism that scans people crossing bridges and tunnels into New York City. While those kinds of actions are meant to make people feel safe, they can have the opposite effect.

Being watched all the time can cause people to be fearful, says Jeramie D. Scott, a senior lawyer at the Electronic Privacy Information Center in Washington, D.C. Fear, he argues, can pose a serious threat to basic rights, such as freedom of speech. For instance, if the government could use facial recognition to identify protesters, people might be less likely to openly object to policies. “Being anonymous allows freedom of thought,” says Scott. “It lets you not worry about every single thing you do being scrutinized by an authority figure.”

FINGERPRINTS AND BEYOND

As facial recognition becomes more widely used, many people want to ensure the technology won’t be abused. They’re calling on lawmakers to create rules to protect people’s rights. A few states already have regulations to safeguard consumers. They prevent businesses, like stores or device manufacturers, from collecting or selling facial recognition data without customers’ permission. Some cities have banned or are considering banning the technology’s use by government agencies, like police departments.

Meredith Broussard, the artificial intelligence expert, believes the facial recognition debate is part of a larger discussion. She says people need to think more critically about technology. Too often, says Broussard, people believe it can solve everything. But technology has limits to what it can do, and it might be the wrong tool for certain problems. Broussard offers this advice to young people: “Educate yourselves about technology so that you can empower yourselves to create the kind of world that you want to live in.”

WEIGHING THE EVIDENCE
